Dance to Music Expressively: A Brain-inspired System Based on Audio-semantic Model for Cognitive Development of Robots

Image credit: from the paper Fig. 1

Abstract

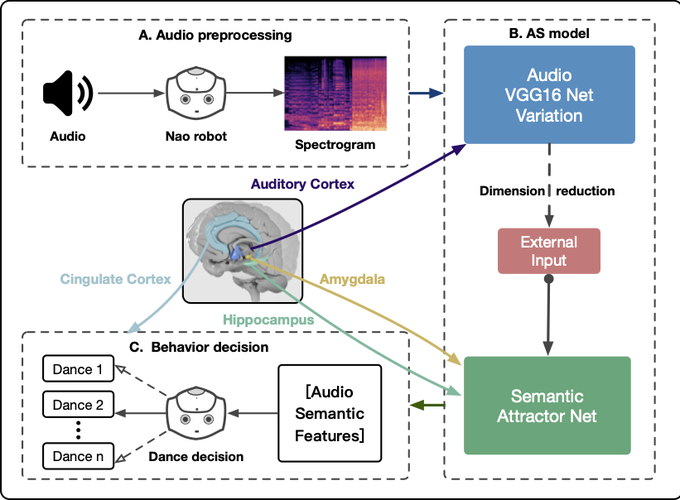

Cognitive development is one of the most challenging and promising research fields in robotics, and emotion and memory play an important role in it. In this paper, we propose an audio-semantic (AS) model that combines a deep convolutional neural network with a recurrent attractor network to associate music with its semantic representation. Using the proposed model, we design a system for the cognitive development of robots, inspired by the functional structure of the limbic system in the human brain. The system allows a robot to make different dance decisions based on the semantic features it obtains from music. The model borrows mechanisms from the human brain: it uses a distributed attractor network to activate multiple semantic tags for a piece of music, and the results meet our expectations. In experiments, we demonstrate the effectiveness of the model and apply the system to the NAO robot.
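The abstract does not give the network equations, so the following is only a minimal sketch of the general idea of a distributed attractor network that activates several semantic tags from an audio cue. It assumes a classic Hopfield-style network with Hebbian weights as a stand-in for the paper's recurrent attractor network, and it assumes the CNN output has already been binarized into a ±1 audio code (the CNN itself is not implemented; orthogonal Hadamard rows play that role here). The tag names, `AudioSemanticAttractor`, `MOVES`, and `choose_moves` are all hypothetical.

```python
import numpy as np

# Hypothetical semantic tag vocabulary (not taken from the paper).
TAGS = ["happy", "sad", "calm", "energetic", "romantic", "dark", "bright", "playful"]

# Hypothetical tag-to-motion table standing in for the robot's dance decision.
MOVES = {"energetic": "jump_step", "happy": "arm_wave", "calm": "slow_sway", "sad": "head_bow"}


def hadamard(n):
    """Sylvester construction (n must be a power of two); rows are orthogonal ±1 vectors."""
    h = np.array([[1]])
    while h.shape[0] < n:
        h = np.block([[h, h], [h, -h]])
    return h


def tags_to_bipolar(active):
    """Multi-hot tag set -> ±1 vector, so several tags can be 'on' at once."""
    return np.array([1 if t in active else -1 for t in TAGS])


class AudioSemanticAttractor:
    """Hopfield-style associative memory over [audio code | tag code] patterns (assumed design)."""

    def __init__(self, audio_codes, tag_sets):
        self.a = audio_codes.shape[1]
        patterns = np.array([
            np.concatenate([code, tags_to_bipolar(tags)])
            for code, tags in zip(audio_codes, tag_sets)
        ])
        n = patterns.shape[1]
        # Hebbian outer-product rule with no self-connections.
        self.w = patterns.T @ patterns / n
        np.fill_diagonal(self.w, 0.0)

    def recall(self, audio_cue, steps=10):
        """Clamp the audio units to the cue and let the tag units settle into an attractor."""
        state = np.concatenate([audio_cue, np.zeros(len(TAGS))]).astype(float)
        for _ in range(steps):
            h = self.w @ state
            new_tags = np.where(h[self.a:] >= 0, 1.0, -1.0)
            if np.array_equal(new_tags, state[self.a:]):
                break  # fixed point reached
            state[self.a:] = new_tags
        return [t for t, s in zip(TAGS, state[self.a:]) if s > 0]


def choose_moves(tags):
    """Map the activated tags to (hypothetical) dance primitives."""
    return [MOVES[t] for t in tags if t in MOVES]


audio_codes = hadamard(64)[:3]  # stand-ins for binarized CNN embeddings of three songs
tag_sets = [{"happy", "energetic", "bright"}, {"sad", "calm", "dark"}, {"calm", "romantic"}]
net = AudioSemanticAttractor(audio_codes, tag_sets)

noisy = audio_codes[1].copy()
noisy[:6] *= -1  # corrupt 6 of the 64 audio bits
tags = net.recall(noisy)
print(tags)                # ['sad', 'calm', 'dark']
print(choose_moves(tags))  # ['head_bow', 'slow_sway']
```

Because the stored audio codes are mutually orthogonal, a partially corrupted cue still falls into the basin of the correct memory, and all tags belonging to that memory are switched on together rather than a single winning label being chosen.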